The Engineering Marvel Behind the Next Generation of Wearables

The Backstory: From Calculator Watches to the Quantified Self

The idea of wearable technology is not new.

Its roots go back to experimental shoe-mounted computers in the 1960s and calculator watches in the 1980s. These devices hinted at a future where technology could live on the body, but they remained novelties rather than companions.

The real shift arrived in the mid-2010s with the rise of the Quantified Self movement. Wearables became mainstream tools for tracking steps, sleep cycles, heart rate, calories, and stress. Technology stopped being something we used occasionally and started becoming something we wore daily.

But there was a problem.

These devices still felt like technology. They demanded attention, buzzed for relevance, and constantly pulled users back into screens. We were more informed, but also more distracted.

The next generation of wearables is not about adding more data.

It is about removing friction.

The Present: The Rise of Ambient Computing

By 2026, wearable technology has entered the era of Ambient Computing.

The goal is no longer to place another screen on the body.

The goal is to let technology work quietly in the background.

Modern wearables are powered by Multimodal AI, meaning they combine visual input, audio signals, motion tracking, and biometric data to understand context in real time. Instead of tapping, typing, or searching, users look, speak, or move naturally and the system responds.

We are moving from searching for information to information finding us.

This is where wearables stop feeling like gadgets and start feeling like lifestyle infrastructure.

Case Study 1: Ray-Ban Meta Glasses

When AI Gets Eyes

The Ray-Ban Meta Glasses represent one of the most important shifts in AI wearables, not because of how powerful they are, but because of how normal they feel.

They look like everyday glasses.

The experience feels simple.

You are walking through a city, look at a menu written in French, and say:

Hey Meta, translate this.

You are hiking, see a plant, and ask:

Hey Meta, what kind of plant is this?

No phone. No typing. No visible interaction ritual.

What feels effortless is powered by a carefully orchestrated infrastructure.

Under the Hood: The Three-Tier Infrastructure Behind Smart Glasses

1. The On-Device “Edge” Power

At the heart of the Ray-Ban Meta glasses lies the Qualcomm Snapdragon AR1 Gen 1 platform.

- The Processor: This is the first dedicated chip designed specifically for sleek smart glasses. It handles high-quality image processing and on-device AI without overheating the frames sitting on your face.

- Storage: With 32GB of internal storage, the device can keep 3K video clips and 12MP photos locally before needing to sync.

- Sensors: A 5-microphone array uses beamforming technology to isolate your voice from background noise, while open-ear speakers use directional audio to keep your calls private.
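To make the beamforming idea concrete, here is a minimal delay-and-sum sketch. It is a textbook illustration, not the algorithm the glasses actually run: the function name, the integer sample delays, and the assumption that the delays are already known are all hypothetical.

```python
from typing import List


def delay_and_sum(channels: List[List[float]], delays: List[int]) -> List[float]:
    """Align each microphone channel by its sample delay, then average.

    channels: one list of samples per microphone (hypothetical input).
    delays:   how many samples later the target voice arrives at each
              microphone; assumed known and non-negative.
    """
    if len(channels) != len(delays):
        raise ValueError("one delay per channel is required")
    if any(d < 0 for d in delays):
        raise ValueError("delays must be non-negative")
    # Only keep positions where every shifted channel still has a sample.
    usable = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(usable)
    ]
```

Sound arriving from the target direction lines up after the shift and adds coherently, while sound from other directions stays misaligned and is attenuated by the averaging.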

2. The Smartphone Bridge

The glasses aren’t a standalone computer: they use your phone as a “co-processor.” Through the Meta View app (since rebranded as the Meta AI app), the glasses offload heavy data tasks to your smartphone via Wi-Fi 6 and Bluetooth 5.3. This “hybrid processing” is what allows the battery to last through the day while still performing complex tasks.
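One way to picture hybrid processing is as a routing decision made for every task. The sketch below is illustrative only: the `Task` fields, the cost units, and the budget thresholds are assumptions invented for this example, not values from Meta or Qualcomm.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    GLASSES = "glasses"
    PHONE = "phone"
    CLOUD = "cloud"


@dataclass
class Task:
    name: str
    compute_cost: float      # hypothetical relative cost units
    needs_large_model: bool  # True when a cloud-scale model is required


def route(task: Task, glasses_budget: float = 1.0, phone_budget: float = 10.0,
          phone_connected: bool = True) -> Tier:
    """Pick the cheapest tier that can handle the task (assumed policy)."""
    if task.compute_cost <= glasses_budget and not task.needs_large_model:
        return Tier.GLASSES
    if not phone_connected:
        # Anything heavier than the on-device budget needs the phone link.
        raise RuntimeError(f"{task.name!r} needs the phone, but it is not connected")
    if task.needs_large_model or task.compute_cost > phone_budget:
        return Tier.CLOUD
    return Tier.PHONE
```

Under these made-up thresholds, a wake-word check stays on the glasses, mid-weight work goes to the phone, and a request that needs a large multimodal model goes to the cloud.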

3. The Cloud & Llama AI

When you ask, “Hey Meta, look at this monument and tell me its history,” the infrastructure shifts to the cloud.

- The glasses capture the frame, the phone uploads it, Meta’s Llama 4 (or the latest multimodal model) analyzes the pixels, and the answer is sent back to your ears with as little delay as the network allows.

- Latency Optimization: Meta uses “Speculative Processing” to predict what you might ask next, reducing that awkward “loading” pause.

Only selectively processed data flows to the cloud. Continuous raw feeds are avoided to optimize latency, bandwidth, and privacy.
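The general idea behind speculative processing can be sketched as a small prefetch cache: answers to likely follow-up questions are computed ahead of time, so a correct guess skips the round trip. This is a generic illustration, not Meta’s implementation; `SpeculativeCache`, `answer_fn`, and the way predictions are supplied are all hypothetical.

```python
from typing import Callable, Dict, Iterable


class SpeculativeCache:
    """Precompute answers for predicted questions (illustrative only)."""

    def __init__(self, answer_fn: Callable[[str], str]) -> None:
        self._answer_fn = answer_fn  # stand-in for the slow cloud call
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def prefetch(self, predicted_questions: Iterable[str]) -> None:
        # Run the slow call early for each guess not already cached.
        for question in predicted_questions:
            if question not in self._cache:
                self._cache[question] = self._answer_fn(question)

    def ask(self, question: str) -> str:
        if question in self._cache:
            self.hits += 1
            return self._cache.pop(question)  # served without a new call
        self.misses += 1
        return self._answer_fn(question)      # wrong guess: pay full latency
```

The trade-off is that every wrong guess spends compute and bandwidth on an answer nobody asked for, so a real system would have to limit how much it speculates.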

The Data Pipeline That Makes It All Work

Every AI wearable follows a structured data pipeline:

- Sensors capture raw visual, audio, motion, and biometric signals

- On-device systems preprocess and filter the data

- The smartphone aggregates and enriches context

- Cloud AI performs reasoning and synthesis

- Insights return as audio, subtle visuals, or haptic feedback

Latency is not an optimization. It is a core design constraint.

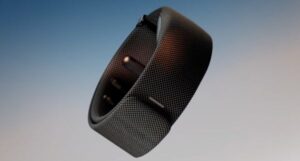
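Treating latency as a design constraint means giving each stage of the pipeline above a budget and checking measurements against it. The sketch below shows that bookkeeping; the stage names mirror the list above, and every number is a made-up placeholder rather than a measurement of any real device.

```python
from typing import Dict, List

# Hypothetical per-stage budgets in milliseconds (illustrative values only).
EXAMPLE_BUDGET_MS: Dict[str, float] = {
    "sensor_capture": 20.0,
    "on_device_preprocess": 30.0,
    "phone_aggregate": 50.0,
    "cloud_reasoning": 600.0,
    "feedback": 50.0,
}


def total_latency_ms(measured_ms: Dict[str, float]) -> float:
    """End-to-end latency is the sum of the sequential stage latencies."""
    return sum(measured_ms.values())


def stages_over_budget(measured_ms: Dict[str, float],
                       budget_ms: Dict[str, float]) -> List[str]:
    """Return the stages whose measured latency exceeds their budget."""
    return [
        stage for stage, ms in measured_ms.items()
        if ms > budget_ms.get(stage, float("inf"))  # unbudgeted stages pass
    ]
```

A check like this makes a slow stage visible as soon as it regresses, instead of leaving it to be discovered as a vague feeling that the assistant is sluggish.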

Case Study 2: Smart Rings

The Quiet Powerhouse of Bio-Tracking

If smart glasses give AI eyes, Smart Rings give it internal awareness.

Devices like the Oura Ring and Samsung Galaxy Ring operate quietly in the background.

They continuously track:

- Heart rate variability

- Sleep quality

- Body temperature

- Stress and recovery

- Long-term physiological trends

Unlike glasses, rings are built around ultra-low-power microcontroller units (MCUs). They use infrared photoplethysmography (PPG) sensors, which shine light into the skin and measure how much is absorbed as blood volume changes with each pulse, to track heart rate and blood oxygen without the power drain of a screen. The real innovation, though, is the AI interpretation layer.

Instead of dashboards full of numbers, these systems provide energy scores, recovery insights, and early burnout signals.
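As an example of how raw pulse timing becomes a single number, here is a sketch of RMSSD, a standard heart rate variability metric computed from the intervals between beats. Whether a given ring uses RMSSD or something else is an assumption here, and the function names are hypothetical.

```python
import math
from typing import List


def rmssd(rr_intervals_ms: List[float]) -> float:
    """Root mean square of successive differences between beat intervals."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two beat-to-beat intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(rr_intervals_ms: List[float]) -> float:
    """Average heart rate implied by the mean beat-to-beat interval."""
    if not rr_intervals_ms:
        raise ValueError("need at least one interval")
    mean_interval_ms = sum(rr_intervals_ms) / len(rr_intervals_ms)
    return 60000.0 / mean_interval_ms
```

Scores such as “recovery” or “energy” would then be built on top of metrics like these, typically by comparing tonight’s values with the wearer’s own long-term baseline.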

This is bio-tracking without distraction.

Neural Bands: When Intent Becomes Input

Beyond glasses and rings lies the next frontier of wearable technology: Neural Bands. These bands use surface electromyography (sEMG) to read the electrical signals that motor nerves send to the muscles at the wrist.

These wearables detect:

- Micro muscle signals

- Subtle neural intent

- Gesture patterns with minimal movement

Neural bands allow users to control digital systems through intent rather than physical interaction.

No keyboard.

No mouse.

No screen.
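A very simplified picture of how a micro muscle signal becomes an input event: rectify the sEMG samples, smooth them into an envelope, and fire when the envelope crosses a threshold. Real neural bands rely on far more sophisticated models; the window size, threshold, and function names below are assumptions for illustration.

```python
from typing import List


def emg_envelope(samples: List[float], window: int) -> List[float]:
    """Moving average of the rectified signal over the last `window` samples."""
    if window < 1:
        raise ValueError("window must be at least 1")
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        envelope.append(running / min(i + 1, window))
    return envelope


def detect_activations(samples: List[float], window: int,
                       threshold: float) -> List[int]:
    """Return the sample indices where the envelope rises to the threshold."""
    onsets = []
    active = False
    for i, level in enumerate(emg_envelope(samples, window)):
        if level >= threshold and not active:
            onsets.append(i)
            active = True
        elif level < threshold:
            active = False
    return onsets
```

Each detected onset could then be mapped to an action such as a click, which is what lets a barely visible finger twitch stand in for a button press.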

Wearables as a Distributed Human Nervous System

Taken together, modern wearables form a system that mirrors biological intelligence.

- Glasses interpret the environment

- Rings interpret the body

- Neural bands translate intent

- Smartphones coordinate

- Cloud AI connects patterns over time

This is distributed intelligence, not gadget overload.

Invisibility, Safety, and Awareness

As wearables blend into daily life, sensing becomes less visible.

Just as luxury stores normalize discreet security systems, wearable infrastructure normalizes ambient sensing.

The challenge is not stopping this shift.

It is ensuring transparency and trust as technology disappears.

As devices like the Ray-Ban Meta glasses go viral, they’ve hit a wall of social friction, specifically around security and privacy.

The “Capture LED” Controversy

Every pair of Ray-Ban Meta glasses has a white LED that lights up whenever the camera is recording. However, we’ve seen the rise of “LED modding,” where users try to cover or disable the light with stickers or software hacks.

The Luxury Retail Response

This has led to a fascinating technological “arms race” in high-end retail.

- Detection Systems: Luxury boutiques in London and Dubai are now installing Optic Sensors at their entrances. These sensors look for the specific “glint” of a camera lens or the electromagnetic signature of smart glasses.

- The Digital Fence: Some high-security zones are experimenting with Local Jamming or “Privacy Shields” that use infrared light to “blind” camera sensors, preventing unauthorized filming of exclusive collections or private clients.

The Future, What Comes Next: From “Wearing” Tech to “Living” Tech

In the next 24 months, the infrastructure will move toward On-Device LLMs. We won’t need the cloud for basic tasks. Your glasses will be able to recognize your coworkers and remind you of their names entirely offline, ensuring that your “vision data” never leaves the device.
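Offline name recall of this kind is usually framed as a nearest-neighbor lookup: an on-device model turns a face into an embedding vector, which is compared with vectors stored locally. The sketch below covers only the lookup step; the embedding model, the vector values, and the similarity threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def recall_name(query: List[float], known: Dict[str, List[float]],
                min_similarity: float = 0.8) -> Optional[str]:
    """Return the best-matching stored name, or None if nothing is close enough."""
    best_name: Optional[str] = None
    best_score = min_similarity
    for name, embedding in known.items():
        score = cosine_similarity(query, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name
```

Because both the stored vectors and the comparison live on the device, nothing in this lookup requires a network connection.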

The future of wearable technology includes:

- Smart textiles tracking posture and muscle fatigue

- Predictive health systems that surface issues before symptoms

- Neural interfaces that reduce reliance on screens

- Context-aware AI that adapts automatically

The most powerful technology will not demand attention.

It will quietly support life.

Frequently Asked Questions

- What is Ambient Computing?

Ambient Computing refers to technology that works in the background, responding to voice, vision, movement, or biometric signals instead of screens.

- How do Ray-Ban Meta Glasses use AI?

They use a three-tier system combining on-device processing, smartphone coordination, and cloud-based AI like Llama.

- Are smart glasses always recording?

No. Recording is user-activated and indicated through visible LED lights.

- What is the difference between smart rings and smartwatches?

Smart rings focus on passive bio-tracking and recovery, while smartwatches focus on interaction and notifications.

- Do smart rings require subscriptions?

Some brands do, while others offer full functionality without subscriptions.

- What are Neural Bands?

Neural bands are wearables that translate neural or muscle signals into digital input, enabling screenless interaction.

- Do wearables send all data to the cloud?

No. Modern systems process most data locally or on the phone before selectively syncing insights.

- Can wearable tech predict health issues?

To a degree. Long-term pattern analysis can surface early signs of stress and health anomalies before symptoms become obvious.

- Is wearable tech safe for everyday use?

Yes. Devices are designed with strict safety, power, and thermal standards.

- Can I use smart glasses if I have a prescription?

Absolutely. One reason the Ray-Ban Meta partnership is successful is that the glasses are designed to be fitted with high-quality prescription lenses, making them a functional tool for daily vision.