As systems become smarter, the consequences of weak data, AI, or security practices compound.

For years, organizations treated data, artificial intelligence, and security as separate domains. Data teams focused on pipelines and analytics. AI teams focused on models and experimentation. Security teams operated alongside, ensuring compliance and protection.

That separation no longer works.

In modern systems, data fuels AI, AI amplifies decisions, and both introduce security risks that cannot be managed in isolation. When these layers evolve independently, small gaps compound into large failures.

Today, the most serious incidents rarely occur because one component breaks. They happen because the connections between data, AI, and security were never designed together.

Data as the Foundation of AI Systems

Every AI system is only as reliable as the data it consumes.

Data determines what AI learns, how it behaves, and where it fails. Poor data quality, fragmented sources, or unclear ownership do not just reduce accuracy – they introduce systemic risk that scales with automation.

As AI becomes embedded into core workflows, data stops being a supporting asset and becomes critical infrastructure. Decisions about how data is collected, shared, and governed directly affect trust, risk, and outcomes.

This is why organizations that treat data casually often see AI systems amplify existing weaknesses rather than create value.
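One way to treat data as infrastructure is to put a quality gate in front of any model that consumes it. The following is a minimal sketch, not a definitive implementation: the field names, the value ranges, and the 5% rejection threshold are all illustrative assumptions, and a real pipeline would derive them from its own schema.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total: int
    rejected: int
    reasons: dict  # failure reason -> number of occurrences


# Hypothetical schema: required fields and allowed numeric ranges.
REQUIRED_FIELDS = ("customer_id", "age", "income")
RANGES = {"age": (0, 120), "income": (0, 10_000_000)}


def check_record(record):
    """Return a list of reasons the record fails; empty means it passes."""
    problems = []
    for name in REQUIRED_FIELDS:
        if record.get(name) is None:
            problems.append(f"missing:{name}")
    for name, (low, high) in RANGES.items():
        value = record.get(name)
        if value is not None and not (low <= value <= high):
            problems.append(f"out_of_range:{name}")
    return problems


def quality_gate(records, max_reject_rate=0.05):
    """Keep clean records; raise if the share of bad records is too high."""
    clean, reasons = [], {}
    for record in records:
        problems = check_record(record)
        if problems:
            for problem in problems:
                reasons[problem] = reasons.get(problem, 0) + 1
        else:
            clean.append(record)
    rejected = len(records) - len(clean)
    report = QualityReport(len(records), rejected, reasons)
    if records and rejected / len(records) > max_reject_rate:
        raise ValueError(f"quality gate failed: {report}")
    return clean, report
```

Failing loudly when too many records are rejected is a deliberate choice here: it stops a degraded feed from silently reaching an automated decision system.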

How AI Increases Speed and Scale of Decisions

AI fundamentally changes how decisions are made.

Traditional systems supported human-paced decisions. AI-driven systems operate continuously, often in real time, across massive volumes of data. Decisions are faster, broader in impact, and harder to reverse.

This increased speed and scale means:

- errors propagate faster
- biased or incorrect data spreads across systems
- security gaps are exploited at machine speed

At companies like Microsoft, this reality has driven a shift toward embedding security and governance directly into cloud and AI platforms, rather than treating them as downstream concerns. As AI accelerates decisions, safeguards must be built into the system itself.

Security as a Design Requirement, Not an Add-On

In modern architectures, security cannot be layered on later.

Security now spans how data is collected, stored, processed, and exposed to AI models. It also includes how outputs are consumed and acted upon.

This goes beyond perimeter defense. Security today includes:

- access control and identity
- data integrity and lineage
- model exposure and misuse prevention
- continuous monitoring and auditability
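As an illustration of the integrity and lineage item above, the sketch below fingerprints a dataset with SHA-256 and records where it came from. It uses only the Python standard library; the function names and the shape of the lineage entry are assumptions made for this example, not a reference to any particular lineage tool.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(rows):
    """Deterministic SHA-256 digest of a list of JSON-serializable rows."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def lineage_entry(dataset_name, rows, source, parent_digest=None):
    """Record where a dataset came from and what it contained."""
    return {
        "dataset": dataset_name,
        "source": source,
        "digest": fingerprint(rows),
        "parent_digest": parent_digest,  # links a derived dataset to its input
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(rows, entry):
    """True if the rows still match the digest stored in the lineage entry."""
    return fingerprint(rows) == entry["digest"]


# Usage: a raw dataset and a dataset derived from it.
raw = [{"id": 1, "amount": 40}, {"id": 2, "amount": None}]
raw_entry = lineage_entry("orders_raw", raw, source="hypothetical_export")
cleaned = [row for row in raw if row["amount"] is not None]
clean_entry = lineage_entry(
    "orders_clean", cleaned, source="cleaning_job",
    parent_digest=raw_entry["digest"],
)
```

Checking `verify(cleaned, clean_entry)` before training or inference gives a cheap signal that the data a model is about to consume is the data that was reviewed.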

Organizations like Apple demonstrate how privacy-first data handling shapes AI system design. By limiting data exposure and emphasizing controlled processing, Apple shows that security and data choices directly influence trust and long-term adoption.

Trust, Governance, and Access Control

As AI systems rely on increasingly sensitive enterprise data, trust becomes a system requirement, not a policy goal.

Trust is built through:

- clear data ownership
- consistent data governance
- controlled access to data and models
- traceability across decisions and outputs

Without governance, organizations struggle to explain why systems behave the way they do. Without access control, sensitive data and AI outputs become vulnerable. Over time, this erodes both internal confidence and external credibility.
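Controlled access and traceability can be combined in a single check that records every decision it makes. The sketch below assumes a simple role-based model; the roles, resources, and actions are hypothetical names chosen for illustration, and a production system would typically delegate this to an identity provider or policy engine.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping of (resource, action) pairs.
ROLE_PERMISSIONS = {
    "data_engineer": {("customer_table", "read"), ("customer_table", "write")},
    "ml_engineer": {("customer_features", "read"), ("churn_model", "invoke")},
    "analyst": {("churn_model", "invoke")},
}


@dataclass
class AccessController:
    audit_log: list = field(default_factory=list)

    def is_allowed(self, user, role, resource, action):
        """Check permission and record the decision, allowed or denied."""
        allowed = (resource, action) in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "resource": resource,
            "action": action,
            "allowed": allowed,
        })
        return allowed


controller = AccessController()
controller.is_allowed("dana", "analyst", "churn_model", "invoke")    # permitted
controller.is_allowed("dana", "analyst", "customer_table", "read")   # denied
```

Logging denials as well as approvals matters: the audit trail is what lets a team later explain who could reach which data or model, and when.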

The Risk of Siloed System Design

Many failures occur not because individual components are flawed, but because data, AI, and security were designed in silos.

When teams operate independently:

- data pipelines evolve without security context
- AI models are built without full visibility into data risks
- security teams react after systems are already live

This fragmentation creates blind spots. AI systems end up operating on data they should not access, or producing outputs that cannot be governed effectively.
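One way to close this kind of blind spot is to share sensitivity labels between the data and AI teams and check them before a model is trained. The following sketch assumes the data team publishes a label per column; the column names, label levels, and the `disallowed_features` helper are all hypothetical.

```python
# Hypothetical sensitivity labels attached to columns by the data team.
COLUMN_LABELS = {
    "region": "public",
    "age_band": "internal",
    "purchase_count": "internal",
    "ssn": "restricted",
}

# Ordered from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]


def disallowed_features(features, max_level):
    """Return features that exceed max_level or have no label at all."""
    limit = LEVELS.index(max_level)
    blocked = []
    for name in features:
        label = COLUMN_LABELS.get(name)
        # Unlabeled columns are blocked: unknown sensitivity is treated as risk.
        if label is None or LEVELS.index(label) > limit:
            blocked.append(name)
    return blocked


requested = ["region", "age_band", "ssn", "device_id"]
blocked = disallowed_features(requested, max_level="internal")
# blocked == ["ssn", "device_id"]
```

Blocking unlabeled columns by default means a new data source cannot reach a model until someone has classified it.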

The GiSax Perspective

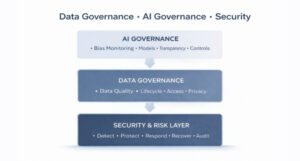

At gisax.io, we see data, AI, and security as interdependent system layers rather than isolated capabilities. Decisions made at the data layer directly influence AI behavior, and both shape the overall security posture.

From our experience, the highest risks emerge when these layers evolve independently. Designing them together creates clarity, accountability, and resilience as systems scale.

Case Study: Equifax, When Data and Security Fail at Scale

A clear example of what happens when data and security are not treated as foundational system layers is the Equifax breach.

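Equifax handled vast volumes of sensitive personal data, but weaknesses in security and governance, including a known web-framework vulnerability that was left unpatched, led in 2017 to one of the largest data breaches in history, exposing the personal information of roughly 147 million people. The impact extended far beyond technical remediation: trust erosion, regulatory scrutiny, and long-term reputational damage followed.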

This incident highlights a broader lesson: when data is treated as an asset but not as infrastructure, and security is treated as an afterthought, the consequences persist long after systems are repaired.

Conclusion: Why This Convergence Matters

As organizations increasingly rely on AI-driven systems, the cost of fragmentation grows.

Treating data, AI, and security as separate concerns leads to systems that are powerful but fragile. Designing them together creates systems that are intelligent, reliable, and trustworthy.

This convergence is no longer optional. It is a foundational requirement for modern digital systems.

FAQs

- What is the relationship between data and AI? AI systems depend on data to learn, predict, and make decisions.
- Why is data important for artificial intelligence? Data quality directly affects AI accuracy and reliability.
- How does AI increase security risks? AI scales decisions, amplifying the impact of data or security flaws.
- Why is security important in AI systems? AI systems often process sensitive data and automate decisions.
- What is data governance? It defines how data is owned, accessed, and managed.
- How does poor data quality affect AI? It leads to biased or unreliable outputs.
- What is AI governance? Frameworks that ensure responsible AI behavior.
- Why should security be built into AI systems? Post-deployment fixes are slower and riskier.
- What are the risks of siloed system design? Blind spots, weak controls, and higher exposure.
- How does access control protect data? It restricts who can view or use sensitive information.
- What is data lineage? Tracking where data comes from and how it moves.
- How do AI models use sensitive data? To generate predictions or insights.
- Why is trust important in AI systems? Trust determines adoption and longevity.
- How does compliance relate to AI? Regulations require transparency and accountability.
- Why do AI systems fail in production? Often due to data drift or governance gaps.
- What is secure data architecture? Architecture designed with security at every layer.
- How does privacy affect AI design? Privacy limits how data can be collected and used.
- Can AI work without large datasets? Sometimes, but data remains critical.
- Why is system design important for AI security? Risks emerge at integration points.
- What is the future of data, AI, and security? They will increasingly be designed as one system.